Core Features

This section provides a technical deep-dive into the key functionalities of AIGNE Hub. By the end, you will understand the platform's primary capabilities, from unified AI model interactions and provider management to robust security and detailed analytics, forming a solid foundation for leveraging the system.

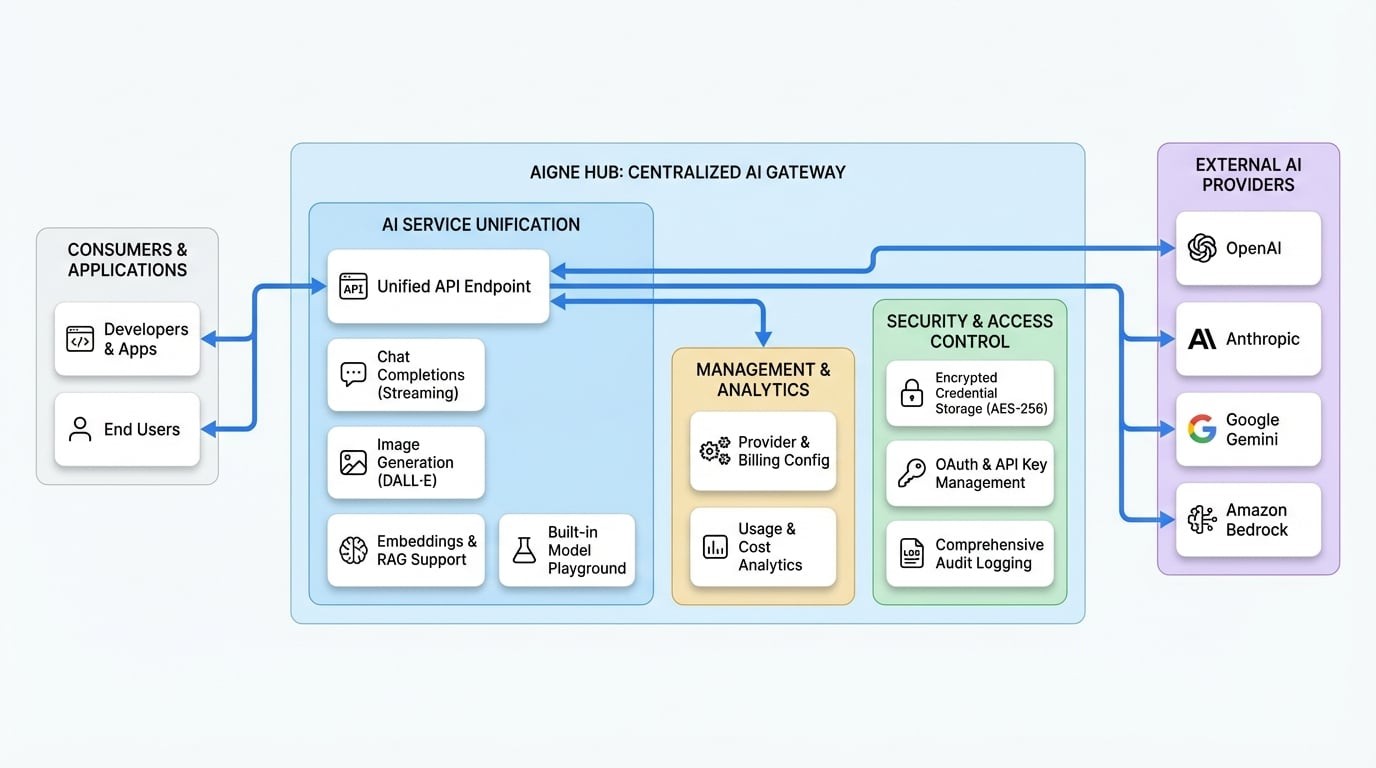

AIGNE Hub is engineered to serve as a central gateway, streamlining all interactions with a diverse range of Large Language Models (LLMs) and AI services. It unifies API access, centralizes security, and provides comprehensive visibility into usage and costs. The platform's features are designed to support both internal enterprise deployments and multi-tenant service provider models.

The following diagram provides a high-level overview of AIGNE Hub's architecture and its core components.

For more detailed information on specific features, please refer to the following sections:

AI Service Unification#

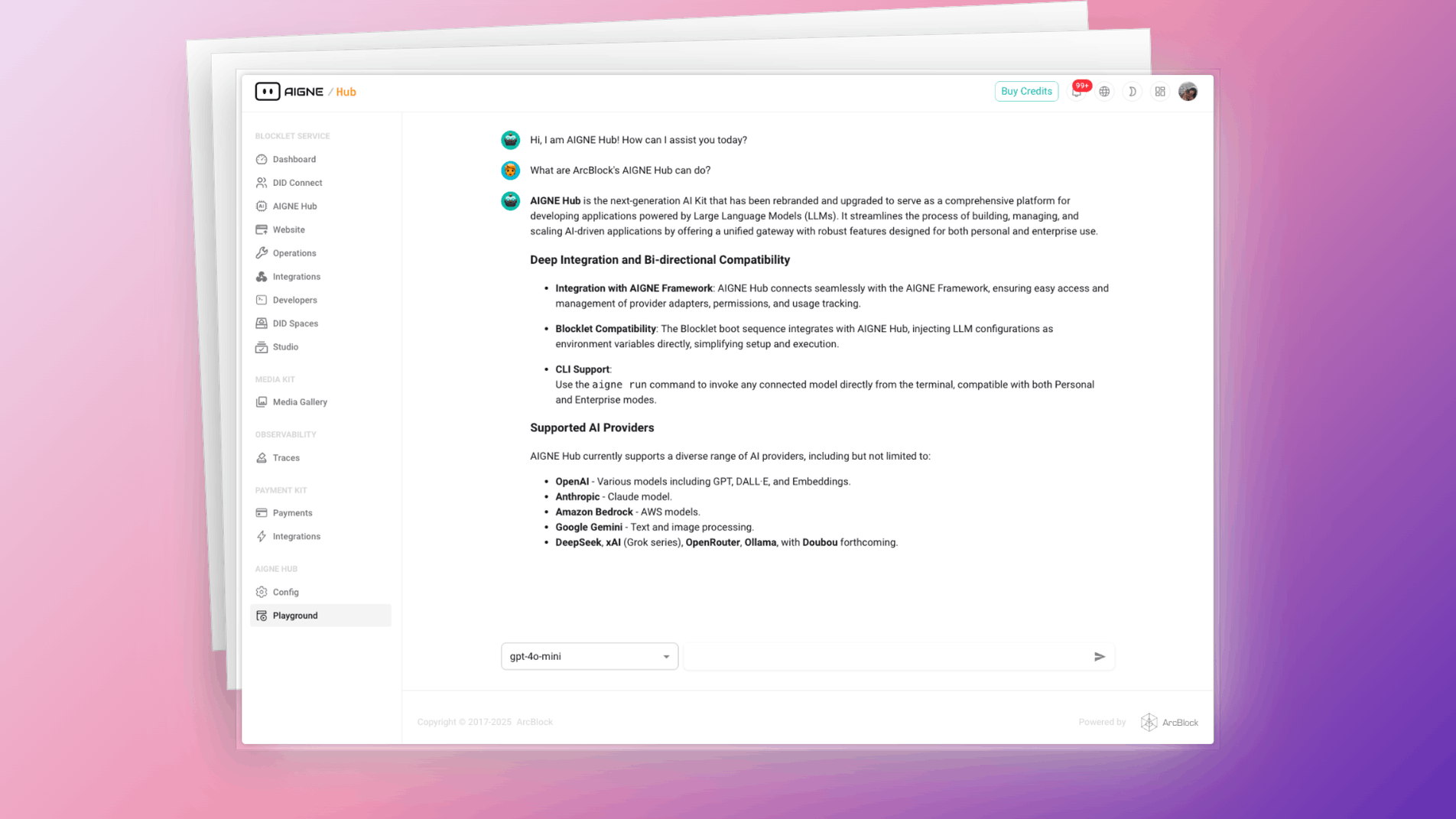

AIGNE Hub abstracts the complexity of integrating with multiple AI providers by offering a single, consistent set of API endpoints. This allows developers to build applications without being locked into a specific vendor and to switch between models seamlessly.

Core AI Capabilities#

The platform provides standardized access to the most common generative AI modalities:

- 💬 Chat Completions: Engage with conversational AI and advanced text generation models for a wide range of applications. The system supports standard and streaming responses through an OpenAI-compatible API.

- 🖼️ Image Generation: Access generative image models like DALL·E for AI-powered image creation and editing tasks.

- 🧠 Embeddings: Generate vector representations of text for use cases such as semantic search, clustering, and retrieval-augmented generation (RAG).

Built-in Model Playground#

AIGNE Hub includes an interactive playground for testing and experimenting with any connected AI model in real time. This tool is invaluable for prompt engineering, model comparison, and rapid prototyping without writing any code.

Centralized Management and Analytics#

Effective management and operational visibility are central to the AIGNE Hub design. The platform provides a unified dashboard to control, monitor, and analyze all AI-related activities.

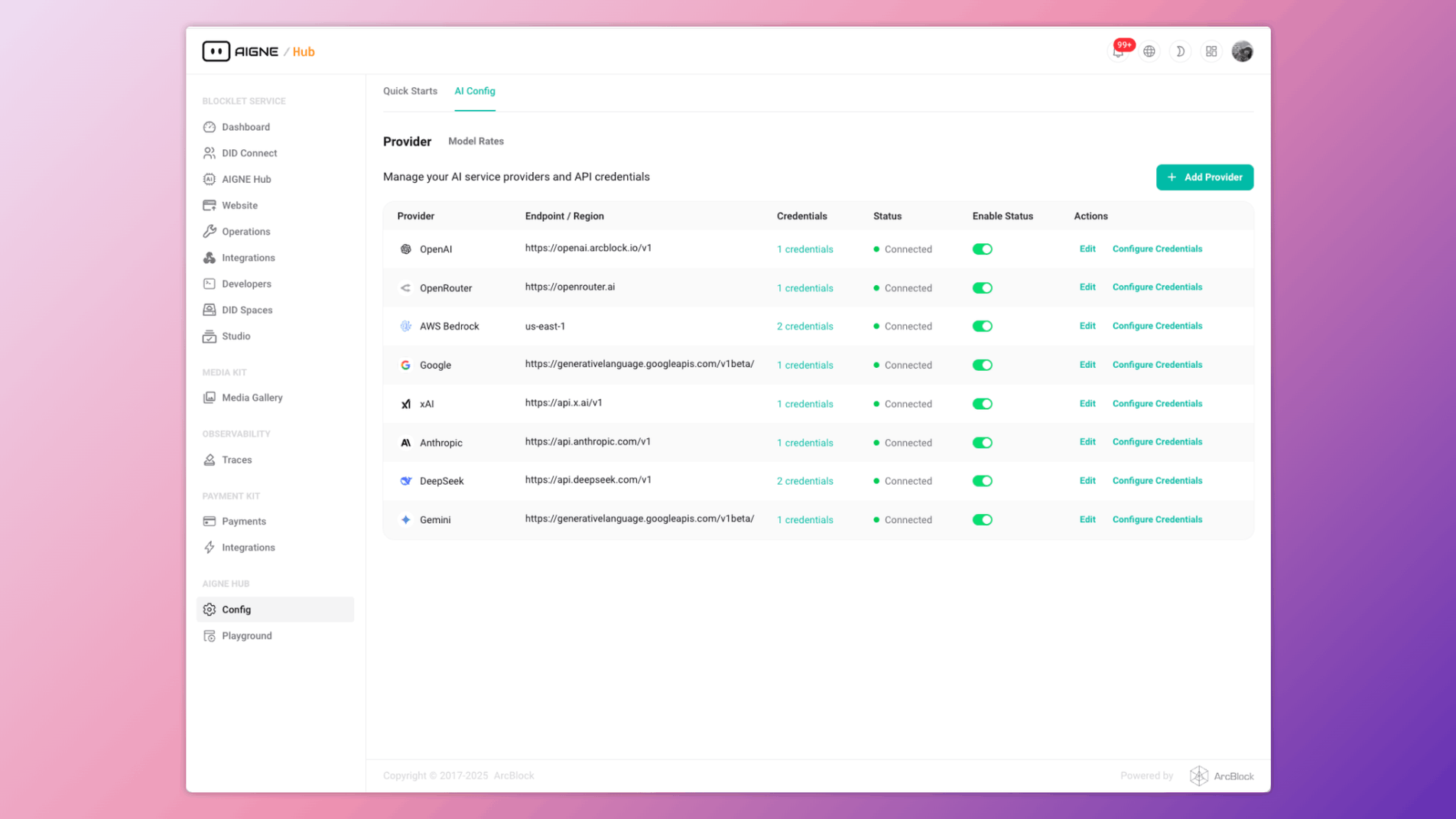

Provider and Billing Configuration#

From a single administrative interface, you can manage all aspects of the service.

- Provider Management: Connect to a growing list of AI providers, including OpenAI, Anthropic, Google Gemini, and Amazon Bedrock. Credentials are encrypted and stored securely.

- Flexible Billing System: Operate in two primary modes. For internal use, you can connect your own provider keys and pay them directly. For public-facing services, you can enable the credit-based billing system, set custom pricing rates, and monetize your AI gateway.

Usage and Cost Analytics#

The analytics dashboard offers deep insights into consumption and spending across all providers, models, and users.

- Usage Tracking: Monitor key metrics such as token consumption, API requests, and latency in real time.

- Cost Analysis: Track spending against your provider accounts or, in service provider mode, monitor revenue and credit consumption. This data is essential for budgeting, forecasting, and optimizing AI expenditures.

Security and Access Control#

AIGNE Hub is built with enterprise-grade security to protect sensitive data and ensure controlled access to powerful AI models.

- Encrypted Credential Storage: All upstream provider API keys and credentials are encrypted using AES-256 to prevent unauthorized access.

- OAuth Integration: Secure access for applications and users through industry-standard OAuth 2.0 protocols.

- API Key Management: Generate and manage API keys within AIGNE Hub, allowing for fine-grained control over application access.

- Audit Logging: A comprehensive audit trail logs all significant events, including API requests, configuration changes, and user activity, ensuring accountability and compliance.

Summary#

AIGNE Hub provides a comprehensive suite of features designed to unify, manage, and secure your organization's access to generative AI. By centralizing provider integrations, offering detailed analytics, and enforcing robust security measures, it serves as a critical infrastructure component for any team building with AI.

To continue, explore the detailed documentation for each core feature area:

- Provider Management: Dive into the specifics of connecting and configuring AI services.

- Usage & Cost Analytics: Learn how to leverage the analytics dashboard for operational insights.

- Security & Access: Understand the platform's security mechanisms in detail.